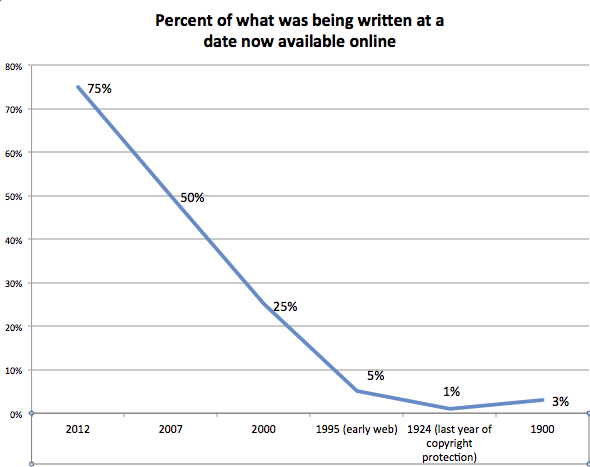

Given the huge amount of data now available online, I am having great difficulty persuading my journalism students of the value of looking elsewhere (for example, in a library). One way to do so, I thought, might be to show them how little of what was written in the pre-web and early web era is currently available online. I don’t have a good source of data to hand about this, so I just put together this graph, pulling figures out of my head. Can anyone volunteer a better source of data for this? Someone from Google Books, perhaps? [Update: Jerome McDonough came up with a great response, which I have pasted below this graph]

If the question is restated as what percentage of standard, published books, newspapers and journals are available via open access on the web, the answer is pretty straightforward: an extremely small percentage. Some points you can provide your students:

* The Google Books Project has digitized about 20 million volumes (as of last March); they estimate the total number of books ever published at about 130 million, so obviously the largest comprehensive scanning operation for print has only handled about 15% of the world’s books by their own admission.

* The large majority of what Google has scanned is still in copyright, since the vast majority of books are still in copyright: the 20th century produced a huge amount of new published material. An analysis of library holdings in WorldCat in 2008 showed that about 18% of library holdings were pre-1923 (and hence in the public domain). Assuming similar proportions hold for Google, they can only make full view of texts available for around 3.6 million books. That’s a healthy number of books, but obviously a small fraction of 130 million, and more importantly, you can’t look at most of the 20th century material, which is going to be the stuff of greatest interest to journalists. You might look at the analysis of Google Books as a research collection by Ed Jones (http://www.academia.edu/196028/Google_Books_as_a_General_Research_Collection) for more discussion of this. You might also be interested in John Price Wilkin’s discussion of rights issues around the HathiTrust collection: http://www.clir.org/pubs/ruminations/01wilkin [I wonder what the situation is like for Amazon’s quite extensive “Look inside the book” programme?]
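For anyone who wants to check or update the arithmetic in the two points above, here is a minimal Python sketch. It uses only the figures quoted there (20 million scanned volumes, 130 million books ever published, 18% pre-1923) and assumes, as the text does, that the WorldCat proportion carries over to Google's scans. The function and variable names are made up for illustration.

```python
# Figures quoted in the points above; all are rough estimates.
GOOGLE_SCANNED = 20_000_000      # volumes digitized by Google Books
ALL_BOOKS_EVER = 130_000_000     # Google's estimate of books ever published
PUBLIC_DOMAIN_SHARE = 0.18       # pre-1923 share of WorldCat holdings (2008)


def coverage_estimates(scanned, total, public_domain_share):
    """Return (share scanned, full-view books, full-view share of all books).

    Assumes the public-domain share of library holdings also applies
    to the scanned volumes, which is an assumption, not a measured fact.
    """
    scanned_share = scanned / total
    full_view = scanned * public_domain_share
    full_view_share = full_view / total
    return scanned_share, full_view, full_view_share


scanned_share, full_view, full_view_share = coverage_estimates(
    GOOGLE_SCANNED, ALL_BOOKS_EVER, PUBLIC_DOMAIN_SHARE
)

print(f"Scanned: {scanned_share:.1%} of all books")
print(f"Full view: about {full_view / 1e6:.1f} million books")
print(f"Full view: {full_view_share:.1%} of all books")
```

On these numbers, the freely readable portion comes out at a little under 3% of everything ever published, which is the point worth putting in front of students.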

As for newspapers, I think if you look at the Library of Congress’s information on the National Digital Newspaper Program at http://chroniclingamerica.loc.gov/about/ you’ll see a somewhat different problem. LC is very averse to anything that might smack of copyright violation, so the vast majority of its efforts are focused on digitization of older, out-of-copyright material. A journalist trying to do an article on newsworthy events of 1905 in the United States is going to find a lot more online than someone trying to find information about 1993.

Now, the above having been said, a lot of material is available *commercially* that you can’t get through Google Books or library digitization programs trying to stay on the right side of fair use law in the U.S. If you want to pay for access, you can get at more. But even making that allowance, I suspect there is more that has never been put into digital format than there is available either for free or for pay on the web at this point. But I have to admit, trying to get solid numbers on that is a pain.

[Thanks again to Jerome, and thanks to Lois Scheidt for passing my query on around her Library Science friends…]